Optimizing Linux's network stack

Background

In today’s world of distributed computing, the network is the backbone of high-performance, low-latency enterprise applications. Understanding and optimizing the Linux network stack is crucial for achieving peak system performance.

Here’s a breakdown of the key components:

Network Components:

Protocols:

For insights on optimizing application-level protocols, check out my previous posts:

- REST Client with desired NFRs using Spring RestTemplate

- Implementing Performant and Optimal Spring WebClient

- RSocket Vs Webflux - Performance Comparison

In this article, we’ll focus on optimizing the network at the kernel level. But first, let’s explore how data flows within the Linux kernel when a client application invokes an API exposed by a downstream system.

Overview of Linux Networking Stack

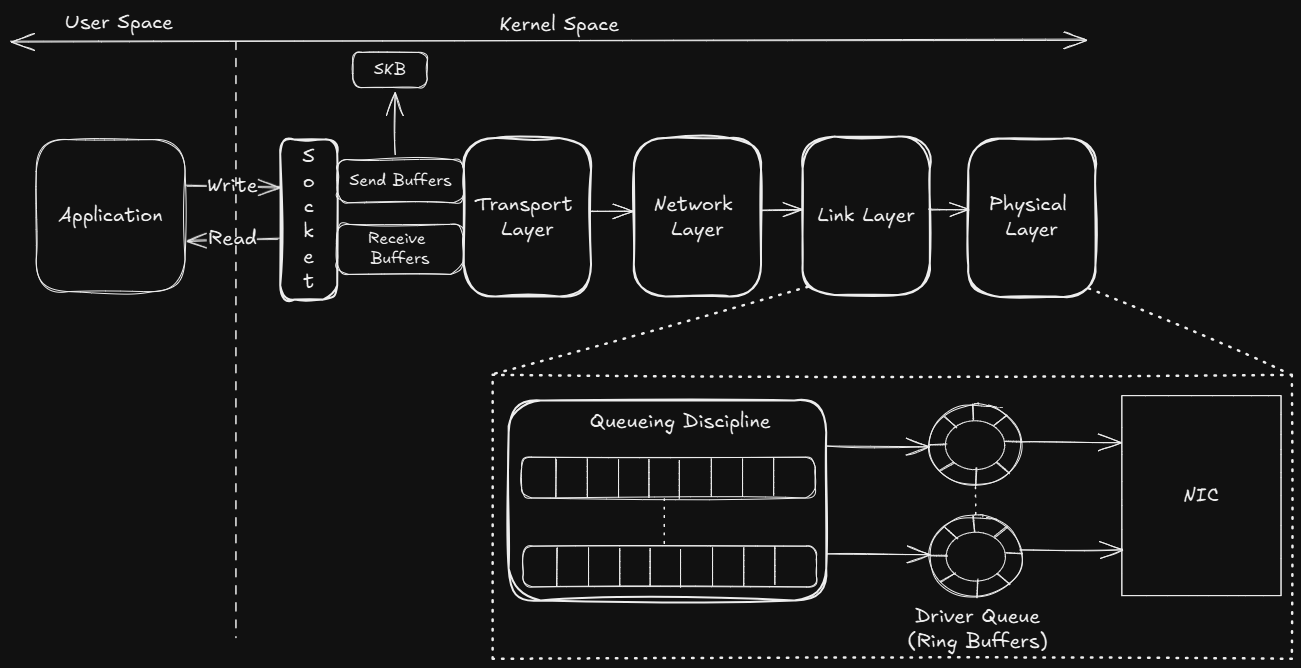

The journey of the sender application's data to the NIC begins in user space, where the application generates data to be transmitted over the network. A system call copies this data into kernel space, into the socket's send buffer, where it is held as a struct sk_buff (socket buffer, or SKB), a data structure that holds the data and its associated metadata. The SKB then traverses the transport, network, and link layers, where the relevant headers for protocols like TCP/UDP, IPv4, and MAC are added.

The link layer mainly consists of queueing disciplines (qdiscs). qdiscs can operate as a parent-child hierarchy, allowing multiple child qdiscs to be configured under a parent qdisc. Depending on priority, the qdisc determines when a packet is forwarded to the driver queue (a.k.a. the ring buffer). Finally, the NIC reads the packets from the driver queue and transmits them on the wire.

Optimizable Areas

- TCP Connection Queues

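To manage inbound connections, Linux uses two types of backlog queues:

- SYN Backlog - for connections whose TCP handshake has not yet completed

- Listen Backlog - for established connections that are waiting to be accepted by the application

The length of each queue can be tuned independently using the properties below. Note that net.core.somaxconn is an upper limit: the effective listen backlog is the smaller of this value and the backlog the application passes to listen().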

    # Manages length of SYN Backlog Queue
    net.ipv4.tcp_max_syn_backlog = 4096

    # Manages length of Listen Backlog Queue
    net.core.somaxconn = 1024
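As a rough illustration of how this limit interacts with application code, the sketch below models the cap the kernel applies to the backlog an application requests in listen(). The helper names (read_somaxconn, effective_listen_backlog) and the requested value of 4096 are hypothetical; the /proc path is the usual location of this setting on Linux, and the reader falls back to a default where it cannot be read.

```python
import socket
from pathlib import Path

SOMAXCONN_PATH = Path("/proc/sys/net/core/somaxconn")  # Linux-only location


def read_somaxconn(default: int = 1024) -> int:
    """Return net.core.somaxconn, or `default` if it cannot be read."""
    try:
        return int(SOMAXCONN_PATH.read_text().strip())
    except (OSError, ValueError):
        return default


def effective_listen_backlog(requested: int, somaxconn: int) -> int:
    """Model the kernel's cap: the listen() backlog is limited to somaxconn."""
    return min(requested, somaxconn)


if __name__ == "__main__":
    limit = read_somaxconn()
    requested = 4096  # hypothetical value an application might ask for
    effective = effective_listen_backlog(requested, limit)
    print(f"somaxconn={limit}, requested={requested}, effective={effective}")

    # The application-side half of the tuning: pass a backlog to listen().
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as srv:
        srv.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        srv.listen(requested)       # a larger request is capped by the kernel
```

The practical takeaway is that raising net.core.somaxconn alone may not help if the server framework passes a small backlog to listen(), and vice versa.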

- TCP Buffering

Data throughput can be tuned by adjusting the size of a socket's send and receive buffers. Keep in mind that larger buffers can improve throughput, particularly on high-bandwidth, high-latency links, but that gain comes at the cost of more memory spent per connection.

The TCP read and write buffers can be managed via:

    # Defines the minimum, default, and maximum size (in bytes) for the TCP receive buffer
    net.ipv4.tcp_rmem = 4096 87380 16777216

    # Defines the minimum, default, and maximum size (in bytes) for the TCP send buffer
    net.ipv4.tcp_wmem = 4096 65536 16777216

    # Enables automatic tuning of TCP receive buffer sizes
    net.ipv4.tcp_moderate_rcvbuf = 1
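A common rule of thumb for choosing the maximum buffer size is the bandwidth-delay product (BDP): the number of bytes that can be in flight on a path at once. The sketch below computes it and checks it against a "min default max" setting. The function names and the link speeds and round-trip times are illustrative assumptions, and the comparison is only a first approximation, since not all of a socket buffer is usable as TCP window.

```python
from typing import Tuple


def bdp_bytes(bandwidth_bits_per_sec: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes for a given link speed and RTT."""
    # bits/s * ms / 1000 gives bits in flight; divide by 8 for bytes.
    return bandwidth_bits_per_sec * rtt_ms // 8000


def parse_tcp_mem_triple(value: str) -> Tuple[int, int, int]:
    """Parse a 'min default max' string as used by tcp_rmem / tcp_wmem."""
    minimum, default, maximum = (int(part) for part in value.split())
    return minimum, default, maximum


def max_covers_path(setting: str, bandwidth_bits_per_sec: int, rtt_ms: int) -> bool:
    """True if the configured maximum is at least one BDP for the path."""
    _, _, maximum = parse_tcp_mem_triple(setting)
    return maximum >= bdp_bytes(bandwidth_bits_per_sec, rtt_ms)


if __name__ == "__main__":
    rmem = "4096 87380 16777216"  # the tcp_rmem value shown above
    for gbit, rtt in ((1, 100), (10, 100)):  # hypothetical paths
        needed = bdp_bytes(gbit * 1_000_000_000, rtt)
        ok = max_covers_path(rmem, gbit * 1_000_000_000, rtt)
        print(f"{gbit} Gbit/s, {rtt} ms RTT: BDP={needed} bytes, covered={ok}")
```

Under these assumptions a 16 MB maximum covers a 1 Gbit/s path with a 100 ms round trip, but not a 10 Gbit/s path with the same latency.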

- TCP Congestion Control

Congestion control algorithms play a vital role in managing TCP-based network traffic, ensuring efficient data transmission and maintaining network stability.

The algorithm can be set using:

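    # Sets CUBIC as the congestion control algorithm. CUBIC is a modern algorithm designed to perform better in high bandwidth and high latency networks
    # (net.ipv4.tcp_available_congestion_control is read-only and only lists the algorithms that can be selected)
    net.ipv4.tcp_congestion_control = cubic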
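Before changing the algorithm, it can be useful to check what the running kernel offers. On Linux, net.ipv4.tcp_available_congestion_control is a read-only list of selectable algorithms, while net.ipv4.tcp_congestion_control holds the one in use and is the key to write. The sketch below reads both from /proc and picks the first preferred algorithm that is available; the helper names and the preference order are assumptions made for illustration.

```python
from pathlib import Path
from typing import List, Optional

PROC_IPV4 = Path("/proc/sys/net/ipv4")  # Linux-only location


def parse_algorithms(text: str) -> List[str]:
    """Split the space-separated list the kernel reports."""
    return text.split()


def choose_algorithm(available: List[str], preferred: List[str]) -> Optional[str]:
    """Return the first preferred algorithm that is available, else None."""
    for name in preferred:
        if name in available:
            return name
    return None


def read_sysctl(name: str) -> Optional[str]:
    """Read one net.ipv4 setting, or None if /proc is unavailable."""
    try:
        return (PROC_IPV4 / name).read_text().strip()
    except OSError:
        return None


if __name__ == "__main__":
    available_text = read_sysctl("tcp_available_congestion_control")
    current = read_sysctl("tcp_congestion_control")
    if available_text is None:
        print("not a Linux host, or /proc is unavailable")
    else:
        # Hypothetical preference order for this example.
        choice = choose_algorithm(parse_algorithms(available_text), ["cubic", "reno"])
        print(f"current={current}, available={available_text}, suggested={choice}")
```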

- Other TCP Options

Other TCP parameters that can be tweaked are listed below. Two of them, tcp_fack and tcp_tw_recycle, only apply to older kernels.

    # Enables TCP Selective Acknowledgement (SACK). Helps in maintaining high throughput along with reduced latency when packets are lost
    net.ipv4.tcp_sack = 1

    # Enables TCP Forward Acknowledgement (FACK). Helps in faster recovery from multiple packet losses within a single window of data
    # Note: FACK was removed in Linux 4.15; on newer kernels this is a legacy option with no effect
    net.ipv4.tcp_fack = 1

    # Allows reuse of sockets in the TIME-WAIT state for new outgoing connections. This can reduce the delay in establishing new connections, mainly for clients that open many short-lived connections
    net.ipv4.tcp_tw_reuse = 1

    # Disables fast recycling of TIME-WAIT sockets. Helps maintain stability and reliability of TCP connections, ensuring that connections are properly closed and all packets are accounted for before the socket is reused
    # Note: this setting was removed in Linux 4.12; omit this line on newer kernels, where the key no longer exists
    net.ipv4.tcp_tw_recycle = 0
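Because some of these keys behave differently across kernel versions (net.ipv4.tcp_fack has had no effect since Linux 4.15, and net.ipv4.tcp_tw_recycle was removed in Linux 4.12), it can help to check a settings file before applying it. The following is a minimal sketch of such a check; the function names and the sample text are hypothetical, and the parser handles only simple "key = value" lines.

```python
from typing import Dict, List

# Keys from this section that newer kernels no longer honour.
LEGACY_KEYS = {
    "net.ipv4.tcp_fack": "no effect since Linux 4.15",
    "net.ipv4.tcp_tw_recycle": "removed in Linux 4.12",
}


def parse_sysctl_conf(text: str) -> Dict[str, str]:
    """Parse 'key = value' lines, skipping blanks and # or ; comments."""
    settings: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            settings[key.strip()] = value.strip()
    return settings


def legacy_warnings(settings: Dict[str, str]) -> List[str]:
    """Return one warning per configured key that is legacy or removed."""
    return [f"{key}: {LEGACY_KEYS[key]}" for key in settings if key in LEGACY_KEYS]


SAMPLE = """
# Other TCP options
net.ipv4.tcp_sack = 1
net.ipv4.tcp_fack = 1
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_tw_recycle = 0
"""

if __name__ == "__main__":
    for warning in legacy_warnings(parse_sysctl_conf(SAMPLE)):
        print("warning:", warning)
```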

- Queueing Disciplines (qdiscs)

qdiscs are the algorithms that schedule and manage network packets before they are handed to the driver queue.
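To make the idea of priority-based scheduling concrete, here is a toy model of a strict-priority qdisc. It is loosely inspired by banded qdiscs such as pfifo_fast, which serves its three bands in order, but it is not how the kernel implements them; the class name, band count, and queue limit are illustrative assumptions.

```python
from collections import deque
from typing import Deque, List, Optional


class ToyPriorityQdisc:
    """Toy strict-priority queue with a fixed number of bands (0 = highest)."""

    def __init__(self, bands: int = 3, limit_per_band: int = 1000) -> None:
        self.bands: List[Deque[bytes]] = [deque() for _ in range(bands)]
        self.limit_per_band = limit_per_band

    def enqueue(self, packet: bytes, band: int) -> bool:
        """Queue a packet; return False (a drop) if the band is full."""
        queue = self.bands[band]
        if len(queue) >= self.limit_per_band:
            return False
        queue.append(packet)
        return True

    def dequeue(self) -> Optional[bytes]:
        """Hand the next packet to the driver: lowest band number first."""
        for queue in self.bands:
            if queue:
                return queue.popleft()
        return None


if __name__ == "__main__":
    qdisc = ToyPriorityQdisc()
    qdisc.enqueue(b"bulk-1", band=2)
    qdisc.enqueue(b"interactive-1", band=0)
    qdisc.enqueue(b"bulk-2", band=2)
    while (packet := qdisc.dequeue()) is not None:
        print(packet.decode())
```

In this model the interactive packet leaves first even though it was queued second, which is the kind of reordering a priority-aware qdisc performs before packets reach the driver queue.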

Conclusion

So we have seen how a data packet travels from an application to the NIC. Along the way, we got a bird's-eye view of the key Linux kernel components that play a major role in data transmission.

Since the network is one of the most frequently blamed components when an application performs poorly, we also took a cursory look at various properties that can be tweaked to optimize its performance.

If you have experience optimizing the network at the OS level with the above or any other properties, feel free to share in the comments!