Optimizing Kafka Producers and Consumers

Background

In the current era, distributed architecture has become the de facto architectural paradigm, which necessitates loosely coupled microservices that talk to each other via Message Oriented Middleware.

As far as Message Oriented Middleware is concerned, Apache Kafka has become ubiquitous in today's distributed systems. Apache Kafka is a powerful, distributed, replicated messaging platform that stores and shares data in a scalable, robust, and fault-tolerant manner. From an application standpoint, developers mainly use the Kafka Producer and Kafka Consumer to publish and consume messages, so both are central to optimizing Kafka-based interactions.

The primary focus of this article is to understand ways to improve the performance of Kafka Producers and Consumers. Performance engineering as a whole has two key dimensions, which often trade off against each other:

- Throughput

- Latency

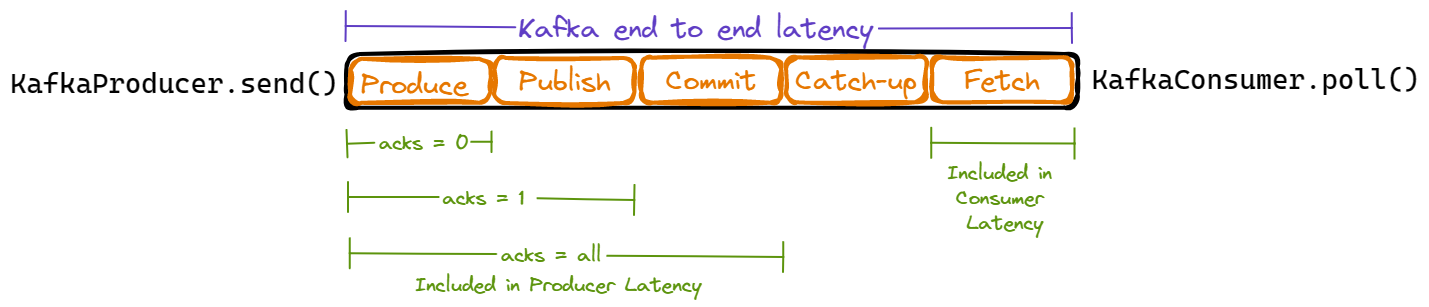

Kafka end to end latency

Kafka end-to-end latency is the time between an application publishing a record via KafkaProducer.send() and an application consuming that record via KafkaConsumer.poll(). The image below indicates the phases a Kafka record goes through:

- Produce Time - Time between the application publishing a record via KafkaProducer.send() and the Producer sending that record to the leader broker of the topic partition.

- Publish Time - Time between Kafka's internal Producer sending a batch of messages to the broker and those messages being appended to the leader's replica log.

- Commit Time - Time Kafka takes to replicate the messages to all in-sync replicas.

- Catch-up Time - Once a message is committed, if the Consumer's offset is N messages behind it, then Catch-up Time is the time the Consumer takes to consume those N messages.

- Fetch Time - Time the Kafka Consumer takes to fetch messages from the leader broker.
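To make the breakdown concrete, the sketch below models end-to-end latency as the plain sum of the five phases. This assumes the phases run strictly one after another, which is a simplification. The class name `EndToEndLatency`, its methods, and the sample numbers are hypothetical and only illustrate the arithmetic; real values have to come from measurement.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: models end-to-end latency as the sum of the five phases.
final class EndToEndLatency {

    // Sums the per-phase durations (in milliseconds) for a single record.
    static long totalMillis(Map<String, Long> phaseMillis) {
        long total = 0;
        for (long ms : phaseMillis.values()) {
            total += ms;
        }
        return total;
    }

    // Made-up sample durations, used only to show the calculation.
    static Map<String, Long> samplePhases() {
        Map<String, Long> phases = new LinkedHashMap<>();
        phases.put("produce", 5L);
        phases.put("publish", 2L);
        phases.put("commit", 3L);
        phases.put("catch-up", 0L); // consumer is already at the latest committed offset
        phases.put("fetch", 4L);
        return phases;
    }

    public static void main(String[] args) {
        Map<String, Long> phases = samplePhases();
        phases.forEach((name, ms) -> System.out.println(name + ": " + ms + " ms"));
        System.out.println("end-to-end: " + totalMillis(phases) + " ms");
    }
}
```

Breaking the total down this way helps show which phase dominates, and therefore whether Producer-side or Consumer-side tuning is likely to matter more.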

Optimization Approach

Typically, data flow through Kafka involves the following actors:

- Producer

- Topic

- Consumer

So we will mainly focus on the Producer and Consumer from an application optimization standpoint.

Optimizing Kafka Producer

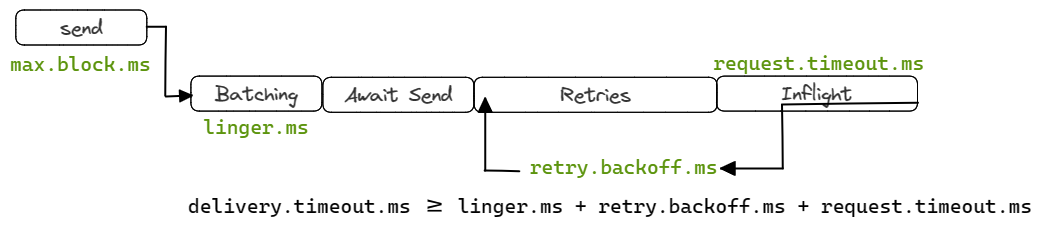

Apart from the message phases above, understanding the delivery time breakdown of the Kafka Producer is equally important from an optimization perspective.

Key Configurations

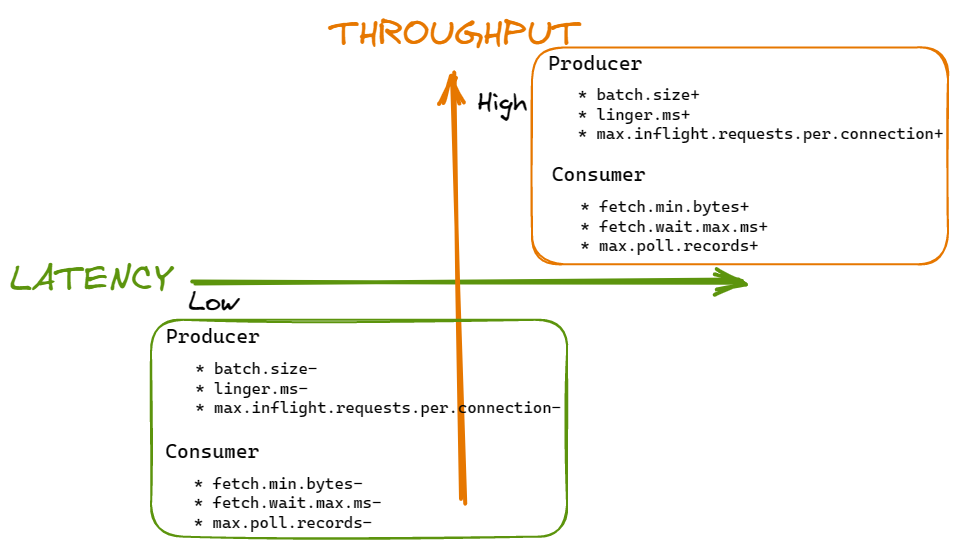

- batch.size - Controls the maximum amount of memory (in bytes) the Producer uses for each batch. Increasing the batch size may improve throughput at the cost of your application's memory footprint. Note: a higher value does not by itself delay sending, because a batch that is not yet full is still sent once linger.ms expires.

- linger.ms - Defines how long (in milliseconds) the Producer waits for more records to accumulate in a batch, up to batch.size bytes, before sending it to the Broker. Increasing its value reduces network I/O and thereby improves throughput. However, a higher value may increase Producer latency.

- max.in.flight.requests.per.connection - Controls the maximum number of unacknowledged requests the Producer will send on a single connection before waiting for responses. A higher value improves throughput at the cost of memory usage. Values above 1 can also reorder messages when a failed send is retried, unless idempotence is enabled.
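As a rough illustration, the sketch below collects these three settings in a `java.util.Properties` object of the kind the Java client accepts. The Java client spells the last key `max.in.flight.requests.per.connection`. The class `ProducerTuningSketch`, its method names, and all values are hypothetical and not recommendations. A real Producer would also need `bootstrap.servers` and serializer settings, which are omitted here. The `isBatchReady` helper is a simplified model, not the client's actual logic, of why a larger batch.size alone does not delay sends.

```java
import java.util.Properties;

// Hypothetical sketch: throughput-leaning Producer settings plus a simplified batching model.
final class ProducerTuningSketch {

    // Illustrative values only; tune them through experiments on your own workload.
    static Properties throughputLeaningProps() {
        Properties props = new Properties();
        props.setProperty("batch.size", "65536"); // bytes available to each batch
        props.setProperty("linger.ms", "20");     // wait up to 20 ms for a batch to fill
        props.setProperty("max.in.flight.requests.per.connection", "5");
        return props;
    }

    // Simplified model: a batch is sendable once it is full OR it has lingered long enough.
    static boolean isBatchReady(int batchBytes, int batchSizeBytes, long waitedMs, long lingerMs) {
        return batchBytes >= batchSizeBytes || waitedMs >= lingerMs;
    }

    public static void main(String[] args) {
        System.out.println(throughputLeaningProps());
        // A nearly empty batch still goes out once the linger time has elapsed.
        System.out.println(isBatchReady(100, 65536, 20, 20));
    }
}
```

In this model, with linger.ms set to 0, any batch is ready immediately, which favors latency. Raising linger.ms gives batches time to fill, which favors throughput.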

Optimizing Kafka Consumer

- fetch.min.bytes - Defines the minimum amount of data (in bytes) the Consumer wants the Broker to return for a Fetch request. A lower value reduces latency at the cost of throughput.

- fetch.max.wait.ms - Defines the maximum time the Broker will wait before responding to a Fetch request when there is not yet enough data to satisfy fetch.min.bytes. A higher value reduces network I/O and thereby improves throughput at the cost of latency.

- max.poll.records - Controls the maximum number of records a single call to KafkaConsumer.poll() will return. Reducing its value will reduce latency by sacrificing throughput.
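The sketch below shows how these Consumer settings might be grouped into two contrasting profiles. It uses the Java client's key `fetch.max.wait.ms` for the Broker wait time. The class `ConsumerTuningSketch`, the `favorThroughput` flag, and every value are hypothetical and only illustrate the direction of each knob. A real Consumer would also need `bootstrap.servers`, `group.id`, and deserializer settings.

```java
import java.util.Properties;

// Hypothetical sketch: two contrasting Consumer profiles built from the three settings above.
final class ConsumerTuningSketch {

    // Illustrative values only; the right numbers depend on the workload.
    static Properties consumerProps(boolean favorThroughput) {
        Properties props = new Properties();
        if (favorThroughput) {
            props.setProperty("fetch.min.bytes", "65536"); // let the Broker accumulate more data
            props.setProperty("fetch.max.wait.ms", "500"); // allow a longer wait for that data
            props.setProperty("max.poll.records", "1000"); // hand back larger chunks per poll
        } else {
            props.setProperty("fetch.min.bytes", "1");     // respond as soon as any data exists
            props.setProperty("fetch.max.wait.ms", "100"); // cap the Broker-side wait
            props.setProperty("max.poll.records", "100");  // keep each poll's workload small
        }
        return props;
    }

    public static void main(String[] args) {
        System.out.println("throughput-leaning: " + consumerProps(true));
        System.out.println("latency-leaning: " + consumerProps(false));
    }
}
```

Keeping both profiles side by side makes it easier to run the same load test against each and compare the measured latency and throughput.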

Kafka - Producer Consumer Optimization Axes

Pictorially, we can collate the above understanding into a set of Kafka Producer and Consumer axes that make it easy to remember the key configurations and their impact on application performance.

Conclusion

In this article we saw what end-to-end latency means in Kafka, along with the phases a message typically goes through, so we now understand which phases affect the performance of Kafka Producers and Consumers. We also covered key configurations that can help reduce latency and increase throughput. Understanding the impact of these configurations shows that there is a tradeoff between high throughput and low latency. The right balance is found through experimentation, guided by the nature of the application (throughput sensitive or latency sensitive) and the volume of load.

P.S. By default, Apache Kafka is configured to favor low latency over throughput.
